Inflation is climbing, but not alarmingly… a September cut is expected… is AI about to start disrupting other AI?… the companies most at risk today… where to invest to avoid the disruption

This morning’s Consumer Price Index (CPI) report came in on the warm side, but not hot enough to derail the market’s expectation for a September rate cut.

Prices in July rose 0.2% for the month and 2.7% for the year. The monthly figure matched estimates, and the 2.7% annual number was below the 2.8% forecast.

Core CPI, which strips out volatile food and energy prices, was slightly hotter.

On the month, core CPI climbed 0.3%, translating to an annual rise of 3.1%. Again, that 0.3% reading matched forecasts, but the 3.1% annual reading came in just above the estimate of 3.0%.
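The monthly and annual figures measure different things. The 3.1% annual figure is a year-over-year comparison. Even so, compounding a single month's change over 12 months is a quick way to gauge its pace. The sketch below is a rough illustration only, and it assumes the same monthly rate repeats every month. That assumption is hypothetical; it is not how the BLS reports CPI. Under it, a 0.3% monthly core reading held for a full year would compound to roughly 3.7%, and 0.2% would compound to about 2.4%.

```python
def annualize_monthly(pct_change: float, months: int = 12) -> float:
    """Compound a monthly percent change over `months` months.

    Hypothetical simplification: assumes the same monthly change repeats every month.
    """
    growth = (1 + pct_change / 100) ** months
    return (growth - 1) * 100


if __name__ == "__main__":
    # July readings quoted in the text: 0.2% headline, 0.3% core (month over month)
    for label, monthly in [("headline", 0.2), ("core", 0.3)]:
        print(f"{label}: {monthly}% per month -> {annualize_monthly(monthly):.2f}% annualized")
```

This is why a 0.3% monthly core print reads as "slightly hot" even when it matches forecasts. Sustained, it runs well above the Fed's 2% target.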

So, did the tariff-based inflation boogeyman show up or not?

In some places, yes.

For example, furniture prices rose 0.9% in July and are 3.2% higher than a year ago. Shoe prices jumped 1.4%. And household furnishings and supplies rose 0.7%.

But while some goods prices are rising due to tariffs, we’re not seeing widespread runaway inflation.

Overall, this is more of a slow-burn inflation, not a surge. It’s persistent enough to keep the Fed cautious, but not hot enough to shut the door on rate cuts, especially with labor market softness coming into focus.

If anything, today’s CPI data reinforced the expectation for a rate cut.

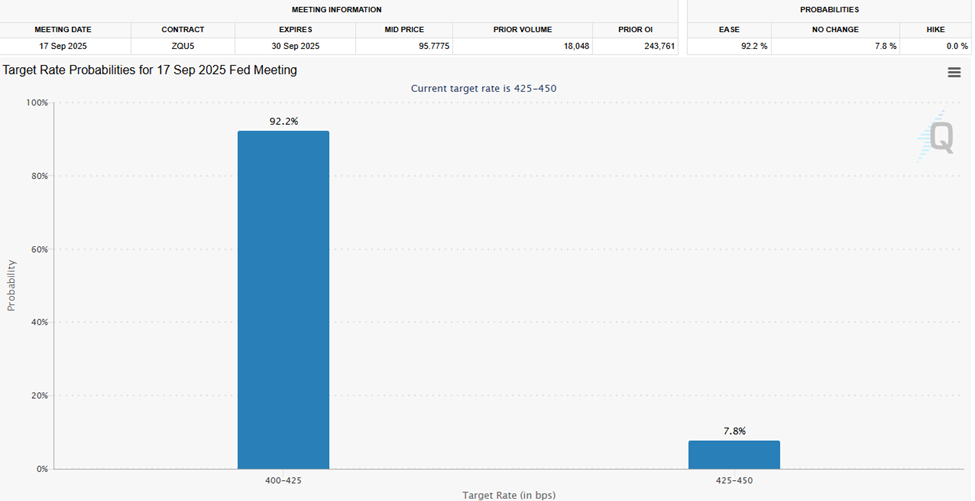

We see this by looking at the CME Group’s FedWatch Tool, which shows the probabilities traders are assigning to different fed funds target rates at future dates.

Yesterday, traders put 85.9% odds on a quarter-point cut next month.

In the wake of this morning’s report, that probability has jumped to 92.2%.

Supply: CME Group
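For readers curious how futures prices turn into odds, here is a deliberately simplified sketch. Fed funds futures are quoted as 100 minus the rate traders expect. So the gap between today's rate and the implied rate, divided by the size of one cut, approximates how much of a cut is priced in. CME's actual FedWatch methodology is more involved: it weights contracts by the days before and after each meeting and handles multiple outcomes. The current rate and futures quotes below are hypothetical inputs chosen only for illustration, not market data.

```python
def implied_rate(futures_price: float) -> float:
    """Fed funds futures are quoted as 100 minus the expected average rate (in percent)."""
    return 100.0 - futures_price


def cut_probability(current_rate: float, futures_price: float, step: float = 0.25) -> float:
    """Approximate probability that one `step`-sized cut is priced in.

    Hypothetical simplification: assumes the contract's whole averaging period
    falls after the meeting and that only "hold" or "one cut" can happen.
    """
    expected = implied_rate(futures_price)
    p = (current_rate - expected) / step
    return max(0.0, min(1.0, p))  # clamp to a valid probability


if __name__ == "__main__":
    current = 4.33  # hypothetical current rate, in percent
    for price in (95.885, 95.9):  # hypothetical futures quotes
        print(f"price {price}: {cut_probability(current, price):.1%} odds of a cut")
```

A small move in the futures price shifts the implied odds noticeably. In this toy setup, each 0.01 rise in price adds 4 percentage points to the cut probability.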

While “hotter inflation” equals “a rate cut” might seem counterintuitive, the thinking goes:

- Recent disappointing labor market data presents a greater risk today than this slow rise in inflation

- Today’s CPI data is moving in the wrong direction, but it’s not hot enough to deter a cut

- It’s still unclear whether we’re seeing ongoing inflation rather than a “one-time” bump higher in prices due to tariffs

So, put it all together and we remain “all systems go” for a September cut.

The new wave of creative destruction is hurtling toward us

Last week, ChatGPT-5 dropped – free to all users – and our technology expert Luke Lango calls it “a monster.”

From Luke’s Daily Notes in Innovation Investor:

It just tied for first place on the Artificial Analysis Intelligence Index – a composite score across eight separate AI intelligence evaluations – matching Grok 4’s performance.

The twist? Grok 4 needed 100 million tokens to get there. ChatGPT-5 did it with 43 million – less than half the compute.
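The efficiency claim comes down to simple arithmetic on the two token counts quoted above. Here is a minimal sketch. It assumes token count is a fair proxy for compute, as the passage implies, although real cost also depends on model size and hardware.

```python
def token_ratio(tokens_used: float, baseline_tokens: float) -> float:
    """Fraction of the baseline's tokens that a model needed for the same score."""
    return tokens_used / baseline_tokens


grok4_tokens = 100_000_000  # tokens Grok 4 needed on the index (from the text)
gpt5_tokens = 43_000_000    # tokens ChatGPT-5 needed (from the text)

ratio = token_ratio(gpt5_tokens, grok4_tokens)
print(f"ChatGPT-5 used {ratio:.0%} of Grok 4's tokens, i.e. {1 - ratio:.0%} fewer")
# prints: ChatGPT-5 used 43% of Grok 4's tokens, i.e. 57% fewer
```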

Translation: AI isn’t just getting smarter. It’s getting faster, cheaper, and more modular.

That kind of leap could accelerate AI adoption across industries, opening the door to a wave of advanced applications.

Sounds great, right?

Yes and no.

It’s great for the end users harnessing this incredible creative power. But it represents destruction for a new wave of companies – including some AI companies – that are suddenly vulnerable to obsolescence.

Back to Luke:

When foundational models get this good, lower-tier AI products get squeezed.

If ChatGPT-5 or Grok 4 can spin up a fully functional website in minutes, what happens to companies like Wix (WIX) or GoDaddy (GDDY)?

To Luke’s point, if a general-purpose AI can generate a custom, functioning website in minutes – complete with design, copy, search engine and AI optimization, and e-commerce – what’s left for Wix and GoDaddy to offer beyond domain registration?

We’ve opened Pandora’s Box

The same creative destruction could spill over into graphic design tools (think Canva) if and when these AI “super-models” can produce agency-quality branding in seconds.

It even hits marketing automation software if and when campaigns can be strategized, written, and optimized with a few AI prompts.

Think about coding platforms like GitHub Copilot, or freelance marketplaces like Fiverr…

If a super-model like ChatGPT-5 can deliver production-ready code or creative assets with minimal human oversight, will these ecosystems shift entirely to AI-assisted quality control? At best, they shrink significantly and shed human workers.

Even enterprise software isn’t immune. Productivity suites, CRM platforms, or niche SaaS tools could be reduced to features within a mega-AI interface. Just consider the way the smartphone absorbed calculators, cameras, and GPS devices… not to mention home phones and landlines.

Behind all this is one critical question for investors…

How do we separate companies that can integrate and ride the AI super-model wave from those whose only defense is that the wave hasn’t hit them yet?

Before we can answer that effectively, we need to better understand what this wave is and what it represents.

Stepping back, this latest evolution of large language models (LLMs) is incredibly powerful. But now consider what happens when all that capability becomes localized.

Back to Luke to explain:

AI is leaving the cloud. Crawling off the server racks. And stepping into the physical world. And when that happens, everything changes.

Because physical AI can’t rely on 500-watt datacenter GPUs. It can’t wait 300 milliseconds for a round trip to a hyperscaler.

It has to be:

- Always on

- Instant

- Battery-powered

- Offline-capable

- Private

- Cheap

It needs SLMs: compact, fine-tuned, hyper-efficient models built for mobile-class hardware.
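The 300-millisecond figure matters because physical machines run on fixed control loops. As a rough illustration, take a hypothetical robot that updates its decisions 30 times per second. That loop rate is chosen for illustration and is not cited in the text. Each cycle then has about 33 milliseconds, so a single cloud round trip spans roughly nine cycles before an answer comes back.

```python
def cycles_missed(round_trip_ms: float, loop_hz: float) -> float:
    """How many control-loop cycles elapse while waiting on one network round trip."""
    cycle_ms = 1000.0 / loop_hz  # duration of one loop iteration, in milliseconds
    return round_trip_ms / cycle_ms


LOOP_HZ = 30         # hypothetical robot control-loop rate
CLOUD_RTT_MS = 300   # round trip to a hyperscaler, per the text
print(f"{cycles_missed(CLOUD_RTT_MS, LOOP_HZ):.1f} cycles pass per cloud round trip")
```

A model running on the device itself avoids that network wait entirely. That is the core of the "instant" and "offline-capable" requirements above.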

To make sure we’re all on the same page, LLMs are the massive, compute-hungry engines built with hundreds of billions (or trillions) of parameters. They’re powerful, but expensive to run and often overkill for specific tasks.

SLMs, or “small language models,” are by contrast lean, task-focused models that can be fine-tuned to perform at near-LLM quality for a fraction of the cost, energy, and hardware requirements. Some can even run locally on a laptop or smartphone.
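One reason size matters so much for on-device use is memory. A model's weights alone take roughly (number of parameters) × (bytes per parameter). The sketch below is a back-of-the-envelope estimate under that assumption. It ignores activations, caches, and runtime overhead, and the model sizes are hypothetical examples rather than specific products.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Hypothetical model sizes and precisions, for illustration only
examples = [
    ("hypothetical 3B-parameter SLM, 4-bit", 3e9, 4),
    ("hypothetical 70B-parameter LLM, 16-bit", 70e9, 16),
    ("hypothetical 1T-parameter LLM, 16-bit", 1e12, 16),
]
for name, params, bits in examples:
    print(f"{name}: ~{weight_memory_gb(params, bits):,.1f} GB")
```

A couple of gigabytes fits in a modern phone or laptop; hundreds or thousands of gigabytes do not. That gap is why the largest models stay in data centers while smaller ones can move onto devices.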

So, with an SLM, “AI” is no longer a cloud-based service you log into. Instead, it’s woven into the fabric of your everyday device and routine workflow.

Luke writes that SLMs are in Apple’s upgraded Siri, Meta’s new Orion smart glasses, and Tesla’s Optimus robots.

What happens when the power of LLMs meets the precision of SLMs?

If SLMs begin to dominate the broader AI space, we’ll see a massive shift in the playing field.

Back to Luke:

SLMs don’t require data centers. They don’t need $30,000 accelerators. They don’t consume 50 megawatts of cooling. They don’t even rely on OpenAI’s API.

All they need is efficient edge compute, a battery, and a purpose. And that changes everything.

The center of gravity in AI shifts – from cloud-based GPUs and training infrastructure to edge silicon, local inference, and deployment tooling.

So, while LLMs like ChatGPT are grabbing the headlines, SLMs may be the real agents of disruption – quietly dissolving business models and spawning new ones in their wake.

The winners will be the companies that figure out how to own the distribution layer before everyone else.

An example to drive this home

Take Zoom.

Today, live transcription, meeting summaries, and language translation all happen in the cloud. This costs time, bandwidth, and money.

In tomorrow’s world of SLM dominance, those same features could run directly on your laptop or even your phone. No internet lag, no subscription.

Imagine opening your MacBook and the built-in “AI Meeting Assistant” does everything Zoom once charged for. Meanwhile, Microsoft Teams or Google Meet integrates locally powered AI even more deeply.

Overnight, Zoom’s core differentiators vanish. They’re replaced by default features from companies that already own the operating system or productivity suite.

In other words, an SLM turns Zoom – a billion-dollar SaaS giant – into a pre-installed checkbox.

And rather than layoffs, we get an “out of business” sign.

One way to invest in this shift right now

If SLMs represent the brains of the next wave of AI, then robotics and “physical AI” are the bodies those brains will inhabit.

Shrinking models from sprawling, cloud-bound LLMs to nimble, on-device SLMs doesn’t just cut costs. It makes intelligence mobile.

Suddenly, you don’t need a warehouse of servers to power a robot’s decision-making. You can embed advanced reasoning directly into the machine itself.

And this opens a floodgate of possibilities:

- Drones that navigate without a constant cloud connection…

- Warehouse bots that adapt on the fly…

- Humanoid assistants that operate efficiently in real time.

This is where digital intelligence meets mechanical capability – and it’s going to change our day-to-day world.

How do we get ahead of it?

Last week, Luke – along with Louis Navellier and Eric Fry – released their latest collaborative investment research package. It’s about how to position yourself today for the coming era of Physical AI.

Their Day Zero Portfolio holds the seven stocks they’ve identified as best-of-breed in AI-powered robotics, providing targeted exposure to the next wave of exponential AI growth.

Circling back to SLMs, I’ll give Luke the final word:

“Small models” don’t make headlines. But they’re what will drive earnings – because they’re what will scale artificial intelligence to a trillion devices and embed it into the everyday fabric of human life.

SLMs aren’t just a more efficient alternative to massive cloud-based AI. They’re the key to taking artificial intelligence off the server racks and into the real world…

In short: SLMs unlock the era of “physical AI.”

Have a good evening,

Jeff Remsburg