Everyone’s watching the wrong AI boom.

While Wall Street and Silicon Valley obsess over when ChatGPT-5 will launch, or how many exaflops xAI is hoarding, they’re missing the real earthquake.

It’s already rumbling beneath the surface. And it’s about to crack the foundations of the AI world and reorder the entire semiconductor supply chain.

That quake? The silent, seismic shift from Large Language Models (LLMs) to Small Language Models (SLMs).

And it’s not theoretical. It’s happening now.

AI is leaving the cloud. Crawling off the server racks. And moving into the physical world.

Welcome to the Age of Physical AI

If the past five years of AI were about giant brains in the cloud that could pass the bar exam and write poetry, the next five will be about billions of tiny, embedded brains powering real-world machines.

Cleaning your house. Driving your car. Cooking your dinner. Whispering insights through your glasses.

This is AI going physical. And when that happens, everything changes.

Because physical AI can’t rely on 500-watt datacenter GPUs.

It can’t wait 300 milliseconds for a round trip to a hyperscaler.

It needs to be:

- Always on

- Instant

- Battery-powered

- Offline-capable

- Private

- Low-cost

And that means it can’t run GPT-4.

It needs SLMs: compact, fine-tuned, hyper-efficient models built for mobile-class hardware.

SLMs aren’t backup singers to LLMs.

In the world of edge AI, they’re the headliners.

The new AI revolution won’t be televised.

It’ll be embedded. Everywhere.

The SLM Invasion Has Already Begun

You may not have noticed it yet, because the companies deploying small language models aren’t bragging about billions of parameters or trillion-token training sets.

They’re shipping products.

Apple’s (AAPL) upgraded Siri? Runs on an on-device SLM.

Meta’s (META) Orion smart glasses? Powered by locally deployed SLMs.

Tesla’s (TSLA) Optimus robot? Almost certainly driven by an ensemble of SLMs trained on narrow tasks like folding laundry and opening doors.

This isn’t a niche trend.

It’s the beginning of the great decentralization of artificial intelligence: from monolithic, cloud-based compute models to lightweight, distributed intelligence at the edge.

If large language models were the mainframe era of AI, SLMs are the smartphone revolution. And just like in 2007, most incumbents don’t see the freight train coming.

To be clear: LLMs are remarkable, but they don’t scale down to the edge.

You can’t put a 70-billion-parameter model in a toaster. You can’t run GPT-4 on a drone.

SLMs, by contrast, are purpose-built for the edge. They:

- Operate at sub-100-millisecond latency on mobile-class chips

- Fit into just a few gigabytes of RAM

- Deliver reliable performance for 90% of AI agent tasks (instruction following, tool use, commonsense reasoning)

- Can be fine-tuned at low cost for narrow applications
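The “few gigabytes” point comes down to simple arithmetic: the memory needed to hold a model’s weights is roughly its parameter count times the bytes stored per weight. The Python sketch below illustrates that rule of thumb. It is an approximation under stated assumptions: it counts weights only (no KV cache, activations, or runtime overhead), treats 1 GB as 10^9 bytes, and uses hypothetical model sizes and precisions chosen purely for illustration.

```python
# Back-of-the-envelope weight-memory estimate: parameters x bytes per weight.
# Assumption: weights only. KV cache, activations, and runtime overhead are
# ignored, so real-world memory use will be somewhat higher.

def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate size of a model's weights in gigabytes (1 GB = 1e9 bytes)."""
    bytes_per_weight = bits_per_weight / 8
    return num_params * bytes_per_weight / 1e9


# Hypothetical configurations, for illustration only.
EXAMPLES = {
    "70B model, 16-bit weights": (70e9, 16),
    "70B model, 4-bit weights": (70e9, 4),
    "3B model, 4-bit weights": (3e9, 4),
}

if __name__ == "__main__":
    for name, (params, bits) in EXAMPLES.items():
        print(f"{name}: ~{weight_memory_gb(params, bits):.1f} GB")
```

Under these assumptions, a 70-billion-parameter model needs about 140 GB just for its weights at 16-bit precision, far beyond a phone or appliance, while a 3-billion-parameter model quantized to 4 bits fits in roughly 1.5 GB.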

They aren’t omniscient.

They’re the blue-collar AI that gets the job done.

And in a world that needs AI agents in cars, robots, glasses, appliances, manufacturing lines, kiosks, and wearables, reliability and cost will beat generality and elegance every single time.

The Investment Implications: GPU Utopia Cracks

Now here is where it gets interesting.

For the past two years, the core AI investment thesis has been simple:

“Buy Nvidia (NVDA) and anything tied to GPUs, because large language models are eating the world.”

That thesis held up. Until now.

If small language models begin to dominate AI deployment, that thesis starts to break down.

Why?

Because SLMs don’t require data centers. They don’t need $30,000 accelerators. They don’t consume 50 megawatts of cooling. They don’t even rely on OpenAI’s API.

All they need is efficient edge compute, a battery, and a purpose.

And that changes everything.

The center of gravity in AI shifts from cloud-based GPUs and training infrastructure to edge silicon, local inference, and deployment tooling.

This doesn’t mean Nvidia loses.

It means the next trillion dollars in value may accrue somewhere else.

The New Infrastructure Stack for Physical AI

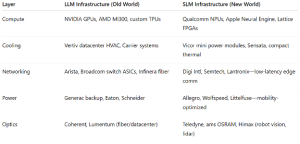

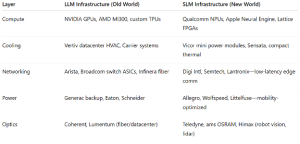

Let’s get specific. The LLM world runs on one kind of infrastructure. The SLM world needs an entirely different stack.

Critically, SLMs are cheap to replicate and don’t require constant API calls to function.

That is a direct threat to the rent-seeking software-as-a-service (SaaS) AI model, but a powerful tailwind for device original equipment manufacturers (OEMs) and edge compute companies.

And based on the chart above, you can start to see how this tectonic shift could play out across public markets.

Qualcomm (QCOM) looks like a major winner. Its Snapdragon AI platform already runs many SLMs. It’s the ARM of the edge AI world.

Lattice Semiconductor (LSCC) could also benefit. The company produces tiny FPGAs, ideal for AI logic in low-power robots and embedded sensors.

Ambarella (AMBA) is another potential standout, with its AI vision SoCs used in robotics, surveillance, and autonomous vehicles.

Among the Magnificent Seven, Apple (AAPL) looks especially well positioned. Its Neural Engine may be the most widely deployed small AI chip on the planet.

Vicor (VICR) also deserves mention. It produces power modules optimized for tight thermal and power envelopes, which are key to edge AI systems.

On the other side of the ledger, several beloved AI winners could find themselves on the wrong side of this transition.

Super Micro (SMCI) may be vulnerable if inference shifts away from data centers and server demand softens.

Arista Networks (ANET) could face pressure as data center networking becomes less critical.

Vertiv (VRT) might see growth flatten if hyperscale HVAC demand slows.

Generac (GNRC) may be exposed to declining demand for backup power if the SLM trend reduces reliance on centralized compute.

This is how paradigm shifts happen.

Not overnight, but faster than most incumbents expect. And with billions in capital rotation along the way.

Build a Portfolio for the SLM Age

If you believe AI is shifting from “text prediction in the cloud” to physical intelligence in the world, then your portfolio needs to reflect that.

Instead of chasing the same three AI megacaps everyone owns, focus on:

- Edge chipmakers

- Embedded inference specialists

- Optics and sensing suppliers

- Power management innovators

- Robotics component suppliers

The mega-cap GPU trade isn’t dead. But it’s not the only game in town anymore.

Final Word

The reason this story isn’t everywhere yet is simple: it doesn’t sound flashy.

“Small models” don’t make headlines. But they’re what will drive earnings, because they’re what will scale artificial intelligence to a trillion devices and embed it into the everyday fabric of human life.

The next wave of AI won’t be about a single god-model ruling from the cloud.

It will be powered by millions of specialized, local models, each performing narrow tasks quietly, reliably, and efficiently.

That’s where the growth is. That’s where the infrastructure buildout is headed. And that’s where investors should be positioning now, before Wall Street catches up.

So, where does this all lead?

Straight into what renowned futurist Eric Fry calls the Age of Chaos: a high-stakes era defined by converging disruptions in tech, geopolitics, and the economy that could make, or break, fortunes.

Fry is no stranger to this kind of moment. He has picked more than 40 stocks that went on to soar 1,000% or more, successfully navigating both bull and bear cycles.

Now he’s back with what may be his most urgent call of the decade.

Fry just released his “Sell This, Buy That” blueprint for navigating today’s AI-fueled mania. Inside, he names seven tickers: four he says to sell immediately, and three he believes could deliver life-changing upside:

- A little-known robotics firm whose revenue has surged 15x since 2019, and one Fry says could outmaneuver Tesla in the race for physical AI

- A stealth e-commerce company he believes could become the next Amazon, with 700% growth potential

- A safer AI play that could outperform Nvidia (NVDA) while protecting capital

He’s giving away all three names and the research behind them for free.

Click here to access Eric Fry’s “Sell This, Buy That” trades for the Age of Chaos.

The AI boom is real. Earnings are soaring. But not all tech stocks are built to survive the next phase.

Fry believes the next 12 to 24 months could be the most volatile of our lifetimes.

And this may be your best chance to get positioned before the new economic order takes shape.

As of the date of publication, Luke Lango did not have (either directly or indirectly) any positions in the securities mentioned in this article.

P.S. You can stay up to speed with Luke’s latest market analysis by reading our Daily Notes! Check out the latest issue on your Innovation Investor or Early Stage Investor subscriber site. Questions or comments about this issue? Drop us a line at langofeedback@investorplace.com.